Sharing Our Passion for Technology

& Continuous Learning

How We Saved $10 Million A Year

Most software projects are pretty simple. We take something off the shelf, or a couple off the shelf components, and do a little bit of customization for a client's specific needs. This both saves time and is generally the way to do right by the client. Using off the shelf components means that when our contract ends, the client has an easier time finding people who can hit the ground running. Every once in a while, however, we get a project that requires a deeper understanding of the domain we're working in and a more bespoke solution. I'm lucky enough to be on such a project right now.

We run an API that sits on top of a number of PetaBytes of parquet filled with geospatial data and measurements. Our contract with our users is a JSON-based DSL that allows users to describe arbitrary subsets of that data and apply arbitrary transformations to it. We need to resolve that data, rasterize it, and either render it to a picture or summarize it with a hard, 30 second limit.

We've done a lot of good work on this project, but in this post, I'm going to talk about the design change that ended up dominating everything. It almost single handedly reduced our AWS spend by $10 Million dollars a year.

Old Call Pattern

Before we get into the change, I need to explain a little bit about this application, so we can talk about exactly why the original system cost so much. Our project renders/summarizes slippy map tiles. To put it succinctly, the entire world is a level 0 tile, and each tile is made up of 4 tiles at a lower level (e.g. the level 0 tile is made of 4 level 1 tiles, each of those level 1 tiles are made of 4 level 2 tiles, etc). Our data is partitioned at level 17, because this lines up with how big our average dataset is geographically.

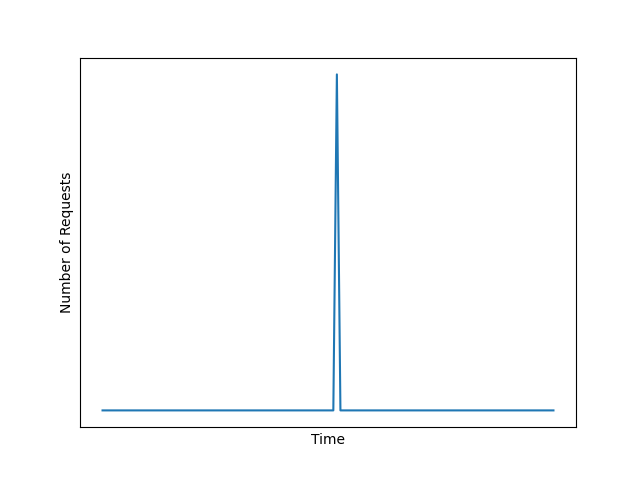

The original API zoomed out until it had a reasonable number of requests to make. It would make the request to a second API, dedicated to building these tiles. If this request wasn't for something bigger than a level 17 tile, would split it apart into a lower map tile level and then make a bunch of requests to itself and then re-assemble the output. It was basically a distributed recursive function call over the network. It was pretty elegant. The one problem is that if some data landed on the intersection of bigger tiles, you ended up with a lot of requests. By a lot, I mean tens of thousands of requests would just pop out of nowhere. Our call graph looked roughly like this:

Yeah, that's not sustainable. Before my time on the project, they used lambdas to generate the tiles, but even Lambda wouldn't scale quickly enough to handle some of the more perverse cases. What they ended up with was a cluster of ~410 EC2 servers. These were powerful machines, each one of them a c6i.24xlarge server, and with the 30 second time limit there just wasn't enough time to scale up new EC2 boxes during the request. If we wanted to serve the requests that came in, we had to have those servers running all the time. This was expensive.

New Call Pattern

We eventually re-wrote that original API. It had some issues that made it impossible to work with, but that's a story for another day. During the re-write, I made two changes to the call pattern.

- I grabbed a metadata file that was originally being requested by the dedicated Tile API.

- I used this metadata file to compute which level 17 tiles had data, and made the requests for those tiles directly.

This wasn't perfect, but it had some nice properties. Large geographic datasets still required a couple thousand calls, but ones that happened to sit on imaginary lines no longer did. This is both easier to understand, and as it turns out significantly easier to deal with than the explosive requests we had been dealing with. With this call pattern change, and a heavy amount of optimization work, we were able to eliminate over 400 servers from our cluster. We're now running ~10 servers in our production cluster. That's really it. Removing 400 servers is enough to save $10,000,000 each year.

$4.08 / c6i.24xlarge * 400 c6i.24xlarge * 24 hours / day * 30 days / month * 12 months / year = $14,100,480 / year

This one change to our call pattern saved our team over $10 Million per year. Closer to $15 Million actually, but who's counting? Well, our clients are counting. According to Gartner, a top research and consulting firm, between 80-90% of CIO’s have been tasked with cutting costs, and doing more with less. The bottom line really is the bottom line. I've done a lot of cool things for this project. I've written raw CUDA to process our rasters, I've written my own GeoTIFF serialization/de-serialization. Nothing comes close to this one change in call pattern, a change that I wouldn't have been able to make if I hadn't taken the time to understand the system as a whole, and what exactly was causing our client's pain points.

That's what we do here at Source Allies, we specialize in solving impossible challenges. We create innovative solutions that drive real impact, whether you need strategic guidance, technical expertise, or custom-built solutions. Give us a call to see how we can turn your challenges into opportunities.